Forward-looking: Nvidia has been working on a new method to compress textures and save GPU memory for a few years now. Although the technology remains in beta, a newly released demo showcases how AI-based solutions could help address the increasingly controversial VRAM limitations of modern GPUs.

Nvidia's Neural Texture Compression (NTC) can provide gigantic savings in the amount of VRAM required to render complex 3D graphics, even though no one is using it (yet). While still in beta, the technology was tested by the YouTube channel Compusemble, which ran the official demo on a modern gaming system to provide an early benchmark of its potential impact and of what developers could achieve with it in the not-so-distant future.

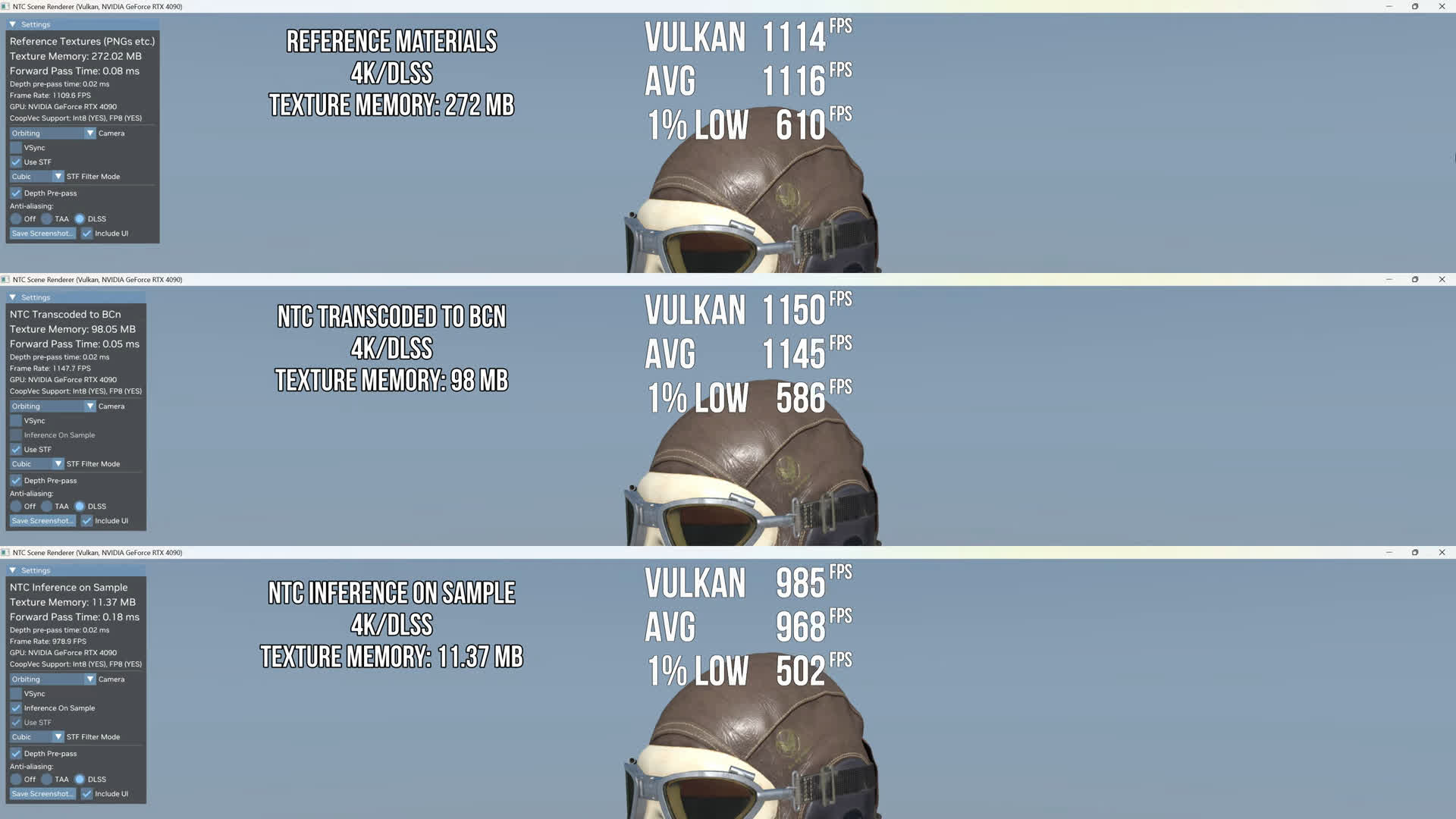

As explained by Compusemble in its video, RTX Neural Texture Compression uses a specialized neural network to compress and decompress material textures dynamically. Nvidia's demo includes three rendering modes: Reference Material, NTC Transcoded to BCn, and Inference on Sample.

- Reference Material: This mode does not use NTC, meaning textures remain in their original state, leading to high disk and VRAM usage.

- NTC Transcoded to BCn (block-compressed formats): Here, textures are transcoded upon loading, significantly reducing disk footprint but offering only moderate VRAM savings.

- Inference on Sample: This approach decompresses texture elements only when needed during rendering, achieving the greatest savings in both disk space and VRAM.
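The difference between transcoding on load and decoding on sample can be illustrated with a toy sketch. This is not Nvidia's implementation: the `decode` function, the tile size, and the latent layout are all hypothetical stand-ins, and the "transcode" path here expands to raw values rather than to BCn blocks. It only shows why keeping a compact representation resident and decoding per lookup needs less memory than expanding everything up front.

```python
from typing import Dict, List, Tuple

Texel = Tuple[float, float, float]

# Hypothetical layout: an 8x8 texture stored as one 2-value latent per 4x4 tile.
WIDTH, HEIGHT, TILE = 8, 8, 4
latents: Dict[Tuple[int, int], List[float]] = {
    (tx, ty): [tx / 2.0, ty / 2.0]
    for tx in range(WIDTH // TILE)
    for ty in range(HEIGHT // TILE)
}


def decode(latent: List[float]) -> Texel:
    """Stand-in decoder (assumption): a fixed linear map from latent to RGB.
    A real system would evaluate a small trained neural network here."""
    a, b = latent
    return (a, b, 0.5 * (a + b))


def sample_on_demand(x: int, y: int) -> Texel:
    """Decode-on-sample style: only the latents stay resident."""
    return decode(latents[(x // TILE, y // TILE)])


def transcode_on_load() -> List[List[Texel]]:
    """Transcode-on-load style: decode every texel once, keep the full grid."""
    return [[sample_on_demand(x, y) for x in range(WIDTH)] for y in range(HEIGHT)]


full_texture = transcode_on_load()
resident_full = WIDTH * HEIGHT * 3      # values held by the expanded texture
resident_latent = len(latents) * 2      # values held by the latent grid
print(resident_full, resident_latent)
```

Both paths return the same texel for a given coordinate; the trade-off is that the on-demand path pays the decode cost on every lookup, which is consistent with the frame-rate impact reported for the demo.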

Compusemble tested the demo at 1440p and 4K resolutions, alternating between DLSS and TAA. The results suggest that while NTC can dramatically reduce VRAM and disk space usage, it may also impact frame rates. At 1440p with DLSS, the NTC Transcoded to BCn mode reduced texture memory usage by 64% (from 272MB to 98MB), while Inference on Sample cut it to 11.37MB, a 95.8% reduction relative to the 272MB figure for non-neural compression.
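The percentages quoted above follow directly from the reported memory figures. As a quick check, here is a minimal sketch; the helper name `percent_reduction` is ours, not part of any Nvidia tool.

```python
def percent_reduction(before_mb: float, after_mb: float) -> float:
    """Return how much smaller `after_mb` is than `before_mb`, in percent."""
    return (before_mb - after_mb) / before_mb * 100.0


# Figures reported for the 1440p DLSS run.
baseline_mb = 272.0
transcoded_mb = 98.0
inference_mb = 11.37

print(round(percent_reduction(baseline_mb, transcoded_mb)))    # 64
print(round(percent_reduction(baseline_mb, inference_mb), 1))  # 95.8
```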


The demo ran on a GeForce RTX 4090 GPU, where DLSS and higher resolutions placed additional load on the Tensor Cores, affecting performance to some extent depending on the setting and resolution. However, newer GPUs may deliver higher frame rates and make the difference negligible when properly optimized. After all, Nvidia is heavily invested in AI-powered rendering techniques like NTC and other RTX applications.

The demo also shows the importance of cooperative vectors in modern rendering pipelines. As Microsoft recently explained, cooperative vectors accelerate AI workloads for real-time rendering by optimizing the matrix-vector operations those workloads rely on. These computations play a crucial role in AI model training and fine-tuning and can also be leveraged to enhance game rendering efficiency.
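In neural inference, the vector operations in question are typically matrix-vector products followed by a simple activation. The sketch below shows that arithmetic pattern in plain Python for a two-layer network. It is purely illustrative: cooperative vectors are a GPU shader feature, and none of the names here (`matvec`, `tiny_mlp`, the example weights) correspond to a real graphics API.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(weights: Matrix, vec: Vector) -> Vector:
    """Multiply a matrix by a vector, one dot product per output row."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def relu(vec: Vector) -> Vector:
    """Clamp negative values to zero."""
    return [max(0.0, v) for v in vec]


def tiny_mlp(x: Vector, w1: Matrix, b1: Vector, w2: Matrix, b2: Vector) -> Vector:
    """Two-layer network: matvec + bias + ReLU, then matvec + bias."""
    hidden = relu([h + b for h, b in zip(matvec(w1, x), b1)])
    return [o + b for o, b in zip(matvec(w2, hidden), b2)]


# Made-up weights, chosen only so the result is easy to verify by hand.
w1 = [[1.0, -1.0], [0.5, 0.5]]
b1 = [0.0, 0.0]
w2 = [[1.0, 1.0]]
b2 = [0.25]

print(tiny_mlp([2.0, 1.0], w1, b1, w2, b2))  # [2.75]
```

A renderer that decodes textures per sample would run many such small evaluations every frame, which is why hardware acceleration of this pattern matters.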
