A hot potato: Upscaling has become a topic of contention since Nvidia and AMD introduced DLSS and FSR, with some users viewing the functionality as a crutch for unoptimized games. After unveiling new GPUs that rely heavily on image reconstruction and frame generation for their claimed performance gains, Nvidia revealed a data point suggesting that the vast majority of RTX GPU users activate DLSS, implying that upscaling has become the norm.

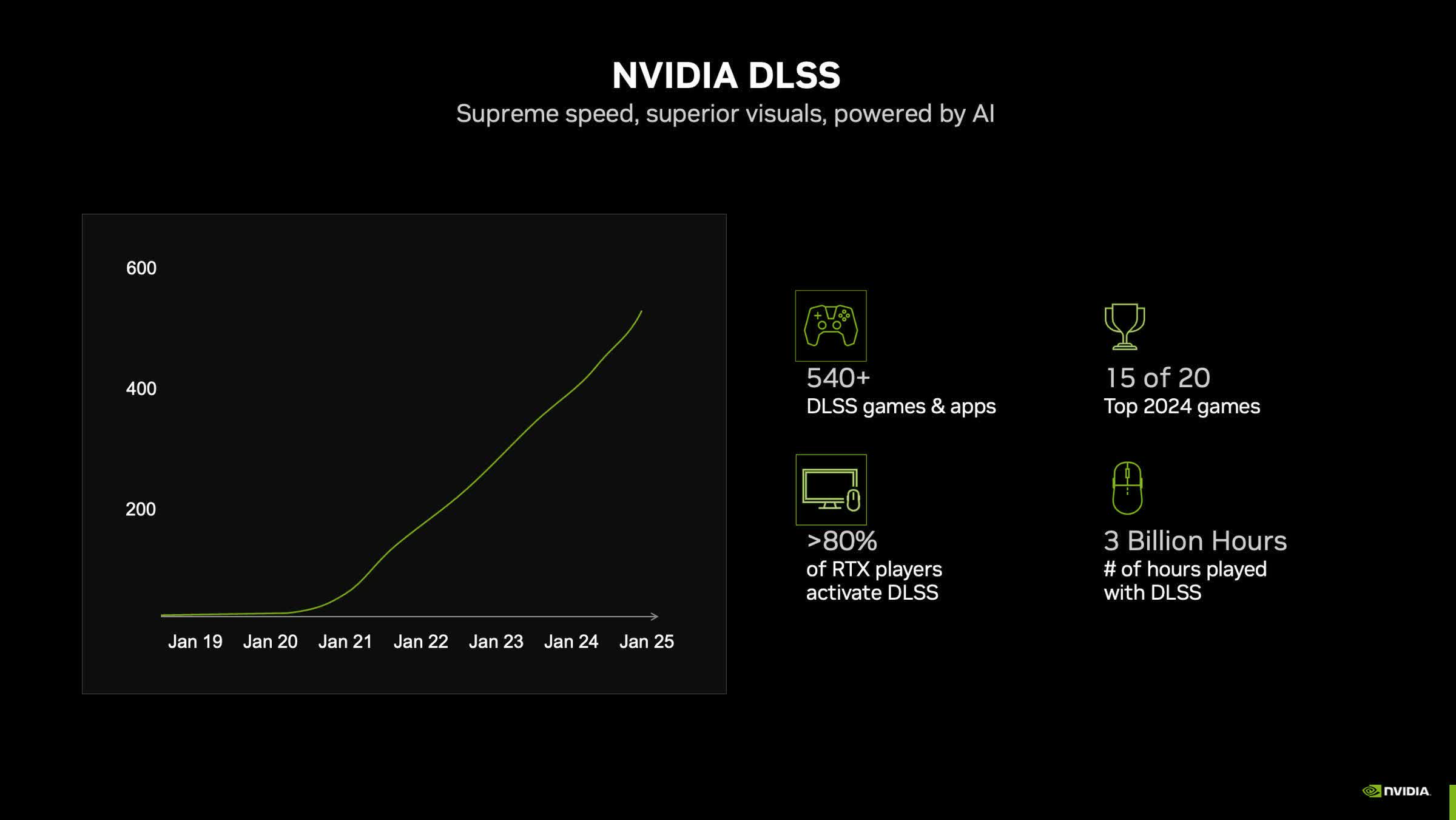

Nvidia held a lengthy presentation at CES 2025 outlining the past and future of its DLSS neural rendering technology. One slide included a data point claiming that over 80 percent of users with RTX 20, 30, and 40 series graphics cards enable DLSS in games, seemingly vindicating the company's extensive use of AI in video game rendering.

While the slide does not specify how Nvidia collected the data, if accurate, it suggests that gamers have overwhelmingly embraced machine learning-based upscaling. The presentation also highlighted that over 500 games and 15 of 2024's top 20 titles support DLSS. However, it did not clarify whether most RTX 40 series owners specifically use frame generation, which is part of the DLSS suite but is a separate step from upscaling.

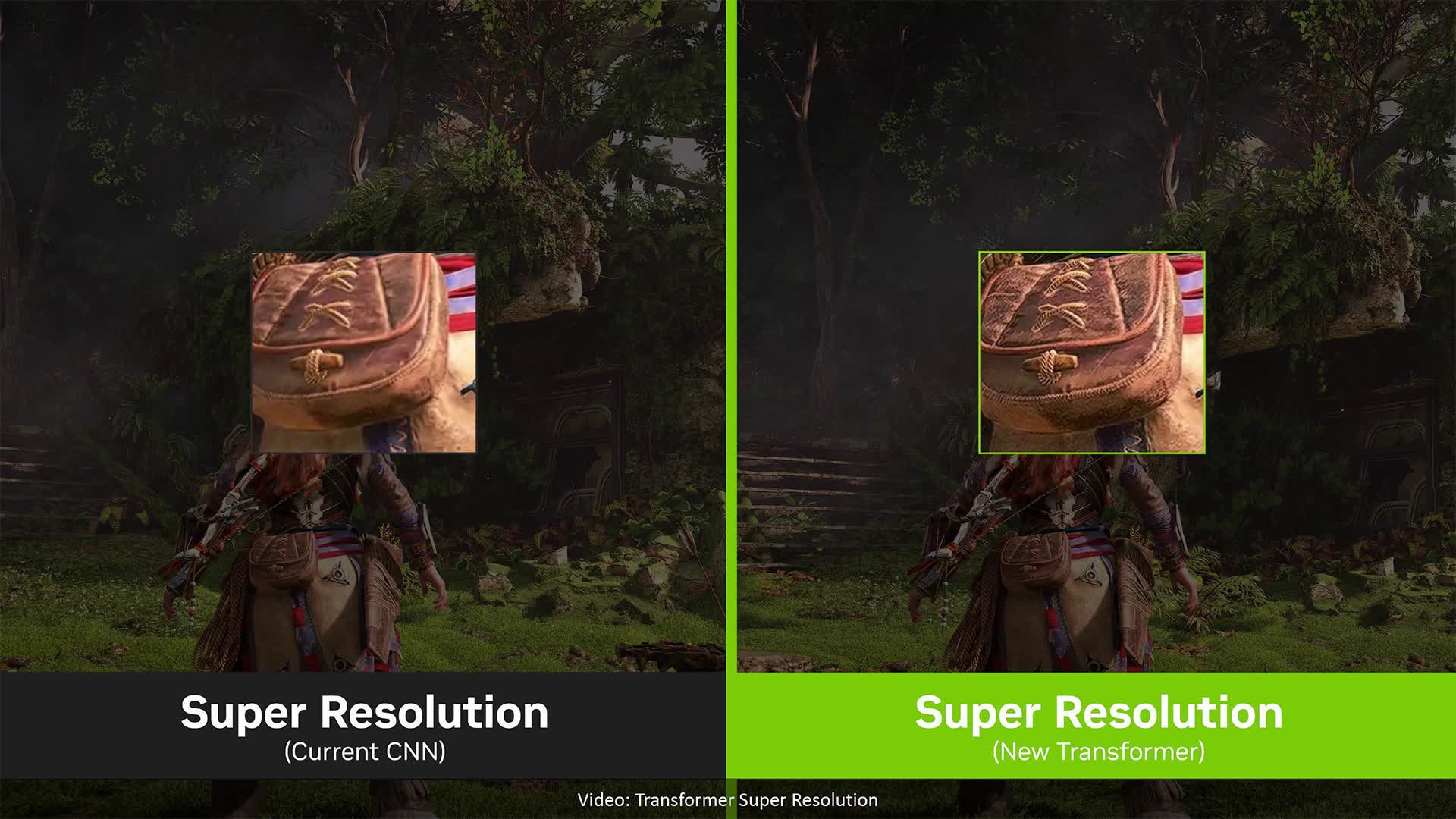

Upscaling technologies like DLSS, FSR, and XeSS render games below a display's native resolution and use advanced techniques to reconstruct the missing pixels, significantly boosting performance. Analyses from TechSpot and other outlets demonstrate that the increased frame rates often far outweigh the minor reductions in image quality. Nvidia's newly revealed DLSS 4 aims to minimize these visual flaws even further by using GenAI transformer models.
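To put rough numbers on that trade-off, the short Python sketch below works out how many pixels a GPU actually renders before the upscaler reconstructs the rest. The function names and the per-axis scale factors are illustrative assumptions, not figures from Nvidia's presentation or the official presets of any particular upscaler.

```python
def render_resolution(native_w: int, native_h: int, scale: float) -> tuple[int, int]:
    """Return the internal render resolution for a per-axis scale factor.

    `scale` is a hypothetical per-axis factor in (0, 1]; 1.0 means native.
    """
    if not 0 < scale <= 1:
        raise ValueError("scale must be in the range (0, 1]")
    return round(native_w * scale), round(native_h * scale)


def pixel_fraction(native_w: int, native_h: int, scale: float) -> float:
    """Fraction of the native pixel count that is actually rendered."""
    w, h = render_resolution(native_w, native_h, scale)
    return (w * h) / (native_w * native_h)


if __name__ == "__main__":
    # Illustrative scale factors for a 4K (3840x2160) display.
    for scale in (1.0, 0.67, 0.5):
        w, h = render_resolution(3840, 2160, scale)
        share = pixel_fraction(3840, 2160, scale)
        print(f"scale {scale:.2f}: renders {w}x{h} ({share:.0%} of native pixels)")
```

Because the scale applies to both axes, halving the resolution per axis means rendering only a quarter of the pixels, which is where most of the performance headroom comes from.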

However, as games like Remnant II, Alan Wake II, and Monster Hunter Wilds now list upscaling as part of their system requirements, debate persists over whether the visual trade-offs are justified.

Additionally, Nvidia is promoting frame generation as a key feature of its RTX 50 series cards. The technique interpolates AI-generated frames between traditionally rendered ones, raising the displayed frame rate without reducing input latency.

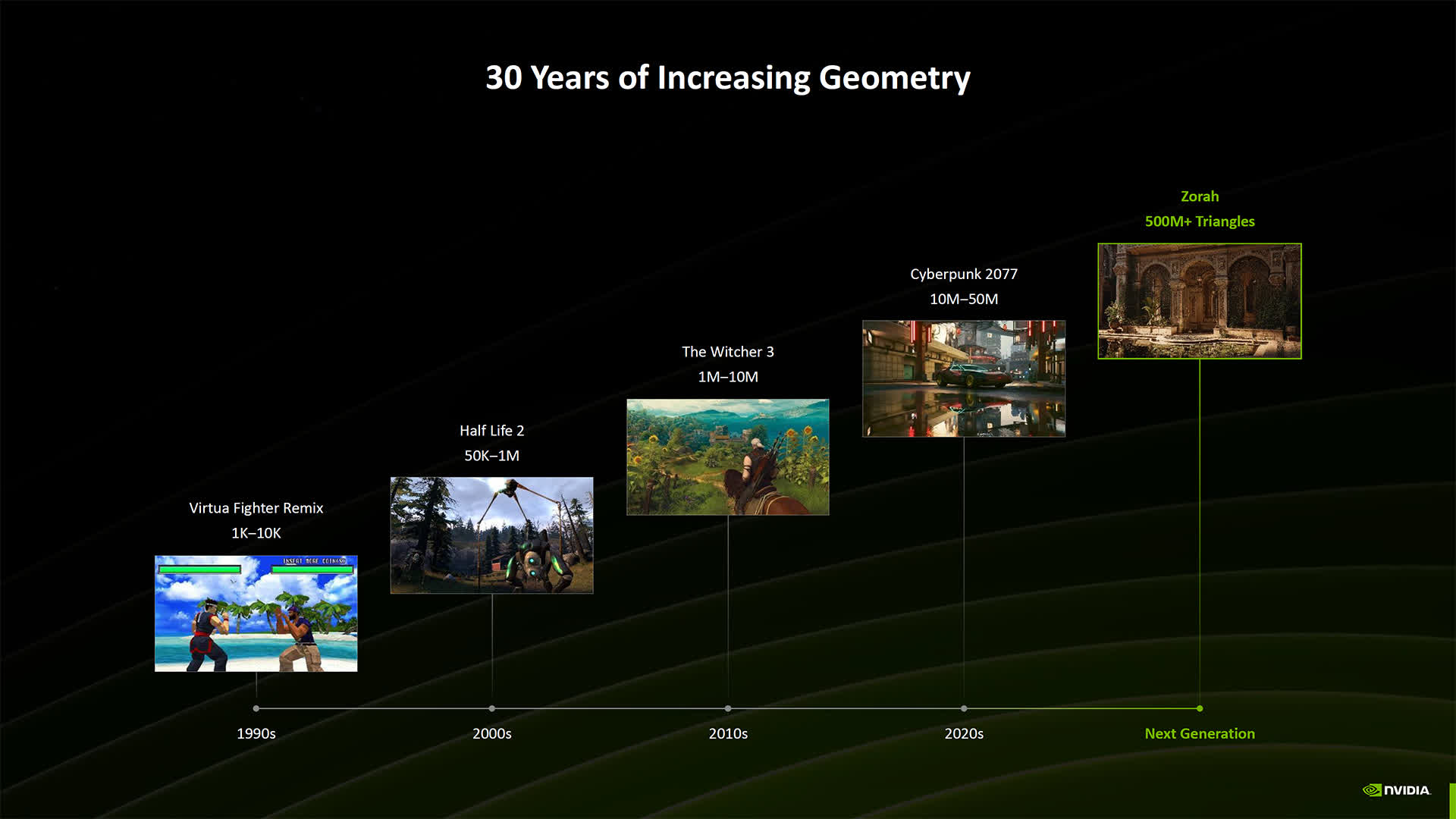

Nvidia's CES presentation also outlined future plans for neural rendering, which will integrate AI-assisted techniques into internal rendering pipelines instead of just resampling the final rendered frame. In the coming years, DLSS could enable games to process more detailed materials, hair, facial expressions, and other assets with minimal performance costs.

Nvidia has previously argued that as performance gains from conventional rendering and semiconductor die shrinks slow down, enhancing visual detail and frame rates will increasingly depend on technologies like neural rendering and frame generation. These technologies already help offset the substantial computational demands of 4K resolution and ray tracing.

Moreover, AMD's and Intel's efforts to play catch-up with Nvidia instead of providing alternative solutions further validate the growing importance of AI rendering. Team Red also unveiled FSR 4 upscaling at CES, which mimics Nvidia's machine learning approach and achieves noticeably better results than FSR 3.

Similarly, late last year, Intel introduced new GPUs that support frame generation using XeSS 2. The shift toward AI-assisted graphics will potentially require new approaches to technical analysis and benchmarking.