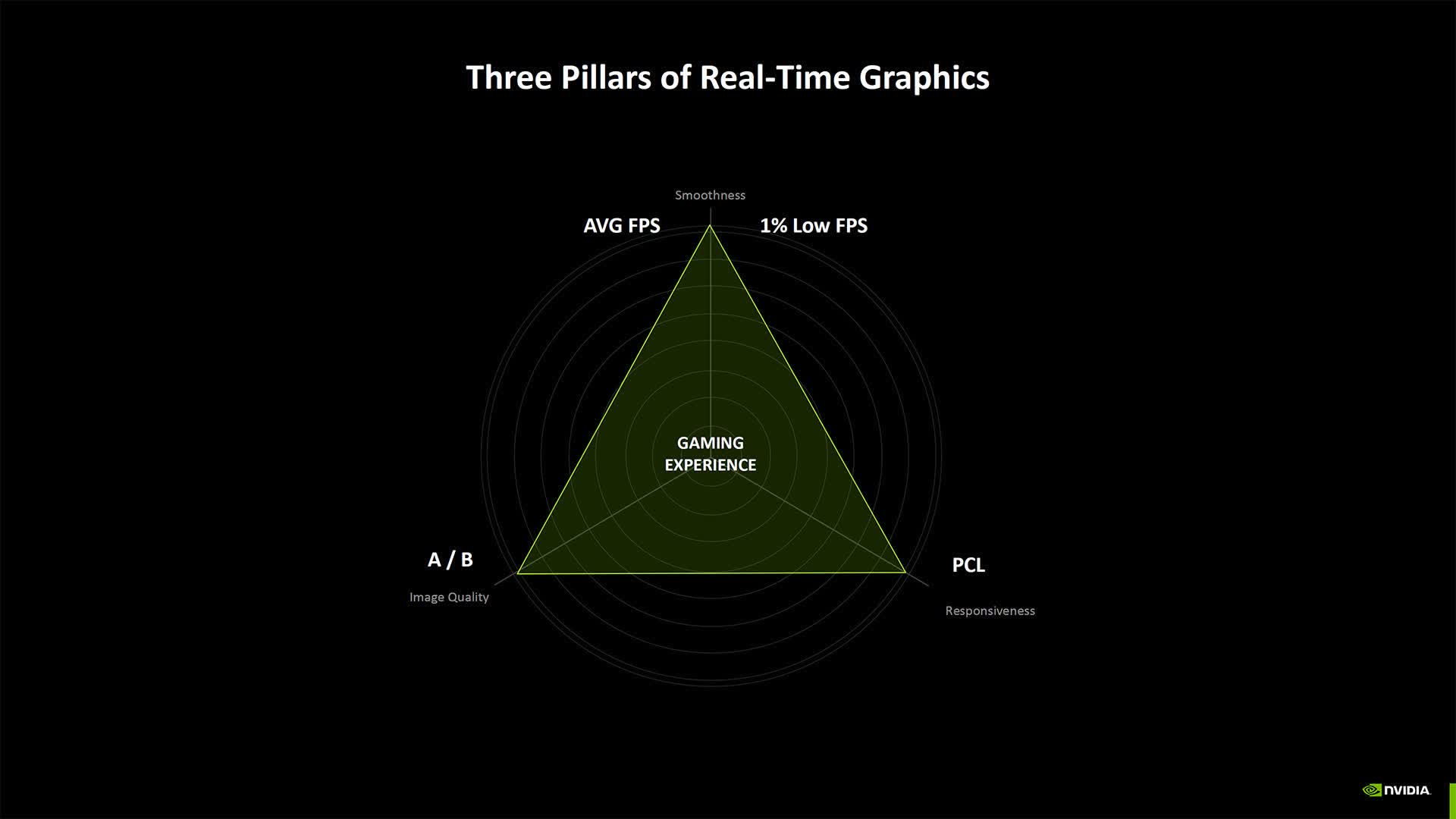

In context: PC gaming and graphics card benchmarks used to focus primarily on frame rates. Although average frame rates still draw the most attention, other factors like latency, 1% lows, upscaling, and frame generation have made performance analysis more complex. Nvidia's RTX 50 series GPUs will soon throw multi-frame generation into the mix, and the company has suggestions (of course) for properly measuring its impact.

After unveiling its next-generation GPUs and the latest changes to DLSS upscaling at CES 2025, Nvidia is offering outlets some advice for analyzing how upcoming games perform on the new graphics cards. Although the company's presentation predictably paints the Blackwell cards in the best possible light, the newly introduced multi-frame generation technology will undeniably require new approaches to benchmarking.

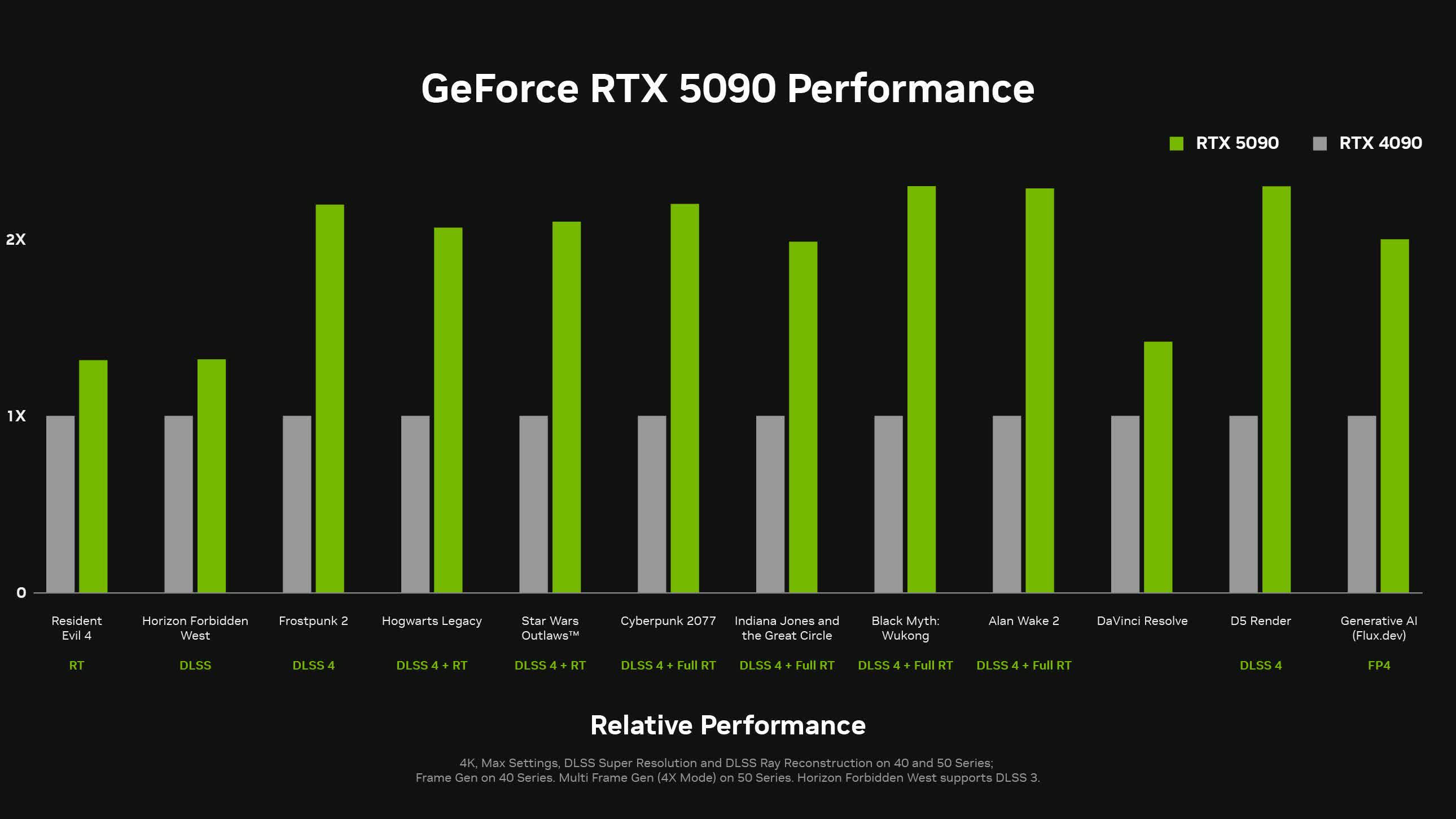

The introduction of DLSS and later AMD's FSR forced benchmarks to add bar charts accounting for the performance gains from upscaling. Image reconstruction has also made analyzing image quality more important.

Frame generation (see our feature: Nvidia DLSS 3: Fake Frames or Big Gains?), which inserts an interpolated frame between every pair of traditionally rendered frames, added another dimension to technical reviews. The technology makes image quality comparisons even more important, and examinations must now account for the latency it adds.

Multi-frame generation further complicates things by inserting two or three interpolated frames between rendered frames, paced using flip metering functionality. Nvidia says flip metering, which is exclusive to RTX 50 series GPUs, is necessary to maintain frame consistency, another crucial benchmarking factor.


Tom's Hardware reports that, in a CES presentation, the company advised outlets using its FrameView utility to switch from the MsBetweenPresents metric to MsBetweenDisplayChange, as the latter can more accurately account for DLSS 4, flip metering, and frame rate fluctuations.
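To make the metric concrete, here is a minimal sketch of how a reviewer might turn a capture of MsBetweenDisplayChange values (milliseconds between frames actually reaching the display) into an average frame rate and a 1% low. The function name and the sample data are hypothetical, and the 1% low definition used here (the average of the slowest 1% of frames) is only one of several conventions outlets use.

```python
def summarize_frame_times(display_intervals_ms):
    """Return (average_fps, one_percent_low_fps) from per-frame display intervals in ms."""
    if not display_intervals_ms:
        raise ValueError("need at least one frame interval")

    # Average FPS: frames shown divided by total elapsed time.
    total_ms = sum(display_intervals_ms)
    average_fps = 1000.0 * len(display_intervals_ms) / total_ms

    # Assumed 1% low definition: average rate over the slowest 1% of frames
    # (always at least one frame).
    worst_count = max(1, len(display_intervals_ms) // 100)
    slowest = sorted(display_intervals_ms, reverse=True)[:worst_count]
    one_percent_low_fps = 1000.0 * len(slowest) / sum(slowest)

    return average_fps, one_percent_low_fps


# Hypothetical capture: 99 frames displayed 5 ms apart plus one 25 ms hitch.
sample = [5.0] * 99 + [25.0]
avg, low = summarize_frame_times(sample)
print(f"average: {avg:.1f} fps, 1% low: {low:.1f} fps")
```

A single hitch barely moves the average but dominates the 1% low, which is why frame pacing matters as much as raw frame rate once generated frames enter the picture.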

When technical reviews are published in the coming weeks after the first RTX Blackwell cards launch in late January, fairly comparing their frame generation results to those of AMD GPUs could prove challenging, as FSR 3 still only produces one interpolated frame for every rendered frame.

A game running at 240fps using AI-generated frames might look smoother than the same game running at 120fps with fewer interpolated frames, or 60fps with only rendered frames, but all of those profiles might feel noticeably different.
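A bit of arithmetic shows how much the displayed number can hide. The sketch below assumes, purely for illustration, that the 240fps profile shows three generated frames per rendered frame and the 120fps profile shows one; those ratios and the helper name are assumptions, not figures from Nvidia.

```python
def rendered_fps(displayed_fps, generated_per_rendered):
    """Frames per second the game engine actually renders."""
    return displayed_fps / (1 + generated_per_rendered)


# (label, displayed fps, generated frames per rendered frame) - assumed ratios
profiles = [
    ("240fps, 3 generated per rendered", 240, 3),
    ("120fps, 1 generated per rendered", 120, 1),
    ("60fps, rendered only", 60, 0),
]

for label, displayed, generated in profiles:
    base = rendered_fps(displayed, generated)
    interval_ms = 1000.0 / base
    print(f"{label}: {base:.0f} rendered fps, "
          f"{interval_ms:.1f} ms between rendered frames")
```

Under these assumptions, all three profiles render the same number of frames per second, so the headline frame rate alone cannot tell them apart. The sketch does not model the latency that frame generation itself adds.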

Eurogamer's early analysis of Cyberpunk 2077 running with DLSS 4 showed that multi-frame generation adds minimal latency and visual artifacts, but it remains unclear whether other titles will exhibit similar results.

Following CES, Nvidia has provided more in-depth information about DLSS 4 and how each 50 series card compares to its direct predecessor. Most of the charts use multi-frame generation to depict performance improvements ranging between 200 and 400 percent. However, the Resident Evil 4 Remake and Horizon Forbidden West, which haven't been updated to support multi-frame generation, show far more modest (and more realistic) raw performance improvements of between 15 and 30 percent.

Nvidia says frame generation and upscaling will require us to rethink benchmarks