The big picture: Doom has been run on just about every piece of hardware imaginable. Now, a new project from Google uses the iconic game – and the meme surrounding its ubiquity – to showcase an alternative method of running game engines with AI. The developers suggest that this could be an early step toward "generating" interactive games from prompts.

Those who claim that generative AI will let people create films, TV shows, or interactive games entirely from prompts are often dismissed as grifters. However, Google's new engine has demonstrated significant progress in interactive scene generation, using the first-person shooter Doom as its test case.

Modders have famously ported Doom to things like lawnmowers, Notepad, a milliwatt neural chip, Teletext, and other games. In this latest experiment, however, a team from Google and Tel Aviv University has built GameNGen, an engine that generates a playable Doom level in real time using a custom diffusion model based on Stable Diffusion.

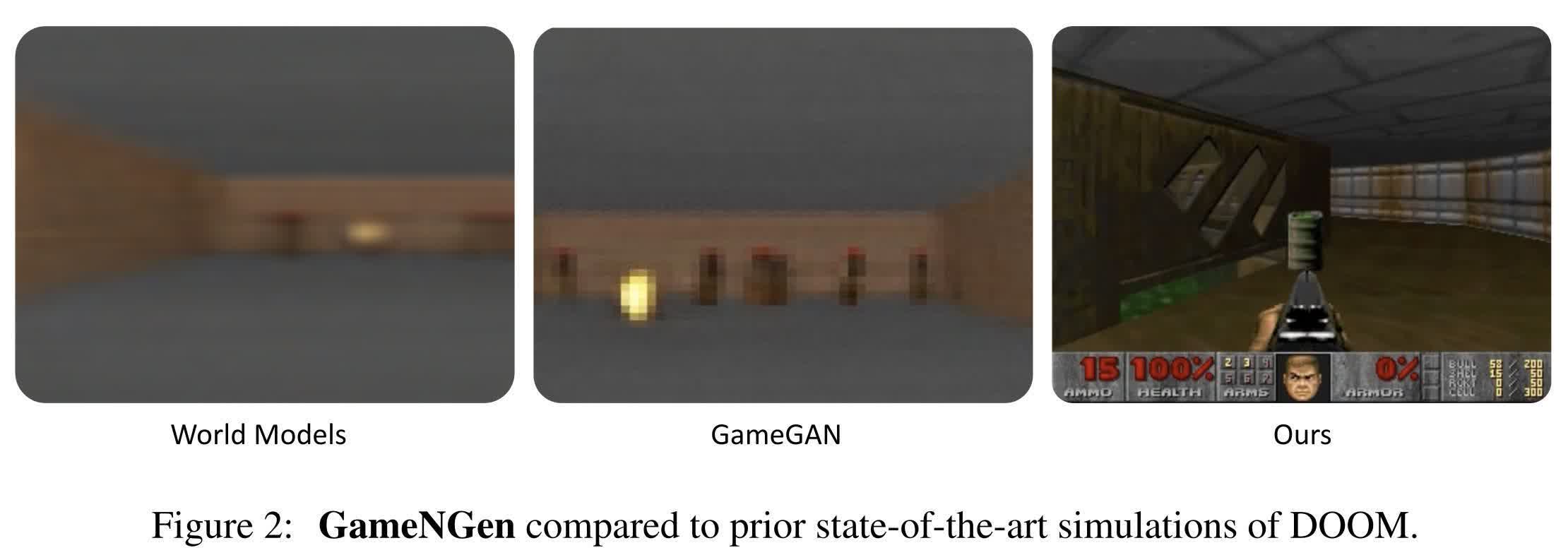

The result is far more complex than previous attempts to generate games from scratch using AI. GameNGen's reproduction of Doom runs at around 20 frames per second on a single tensor processing unit (TPU), with image quality comparable to the original 1993 game. Human observers comparing short clips of the real Doom with the AI-generated clone could tell them apart only slightly better than random guessing.

Moreover, GameNGen's model is fully interactive. Clips show that it understands Doom's basic rules regarding items, ammo, enemies, health, and keycard doors. Minor visual glitches and the blurring typical of AI-generated images are still present, however. More significantly, logical glitches also occur.

For example, enemies may suddenly materialize in front of the player, and objects can reappear after being destroyed. According to the research paper, these inconsistencies arise because the AI can only remember the last three seconds of gameplay. Despite this limitation, it can infer certain details about the game state from the map and the player's status on the HUD. GameNGen's short memory is currently its main drawback.
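Why a short memory produces reappearing objects can be illustrated with a fixed-size context window. The sketch below is a toy model under stated assumptions, not GameNGen's implementation: it assumes roughly 20 frames per second and a three-second memory (about 60 frames), and it represents each frame as a hypothetical dictionary of "events" rather than an image. Once an event falls out of the window, nothing in the context records that it happened.

```python
from collections import deque

FPS = 20                        # approximate frame rate reported for GameNGen
MEMORY_SECONDS = 3              # approximate memory span described in the article
WINDOW = FPS * MEMORY_SECONDS   # about 60 frames of context (assumed)


class FrameContext:
    """Hypothetical fixed-size context that keeps only the most recent frames."""

    def __init__(self, window: int = WINDOW):
        # A deque with maxlen silently drops the oldest item when full.
        self.frames = deque(maxlen=window)

    def push(self, frame: dict) -> None:
        self.frames.append(frame)

    def remembers(self, event: str) -> bool:
        # True only if some frame still inside the window recorded the event.
        return any(event in f["events"] for f in self.frames)


ctx = FrameContext()
ctx.push({"t": 0, "events": {"barrel_destroyed"}})
for t in range(1, WINDOW):                 # 59 more frames: window is exactly full
    ctx.push({"t": t, "events": set()})
print(ctx.remembers("barrel_destroyed"))   # True
ctx.push({"t": WINDOW, "events": set()})   # one more frame evicts frame 0
print(ctx.remembers("barrel_destroyed"))   # False
```

In this toy, a model that sees only `ctx.frames` has no way to know the barrel was destroyed, so it may plausibly draw it again. Information that stays on screen, such as the HUD, survives because it is re-rendered in every frame inside the window.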


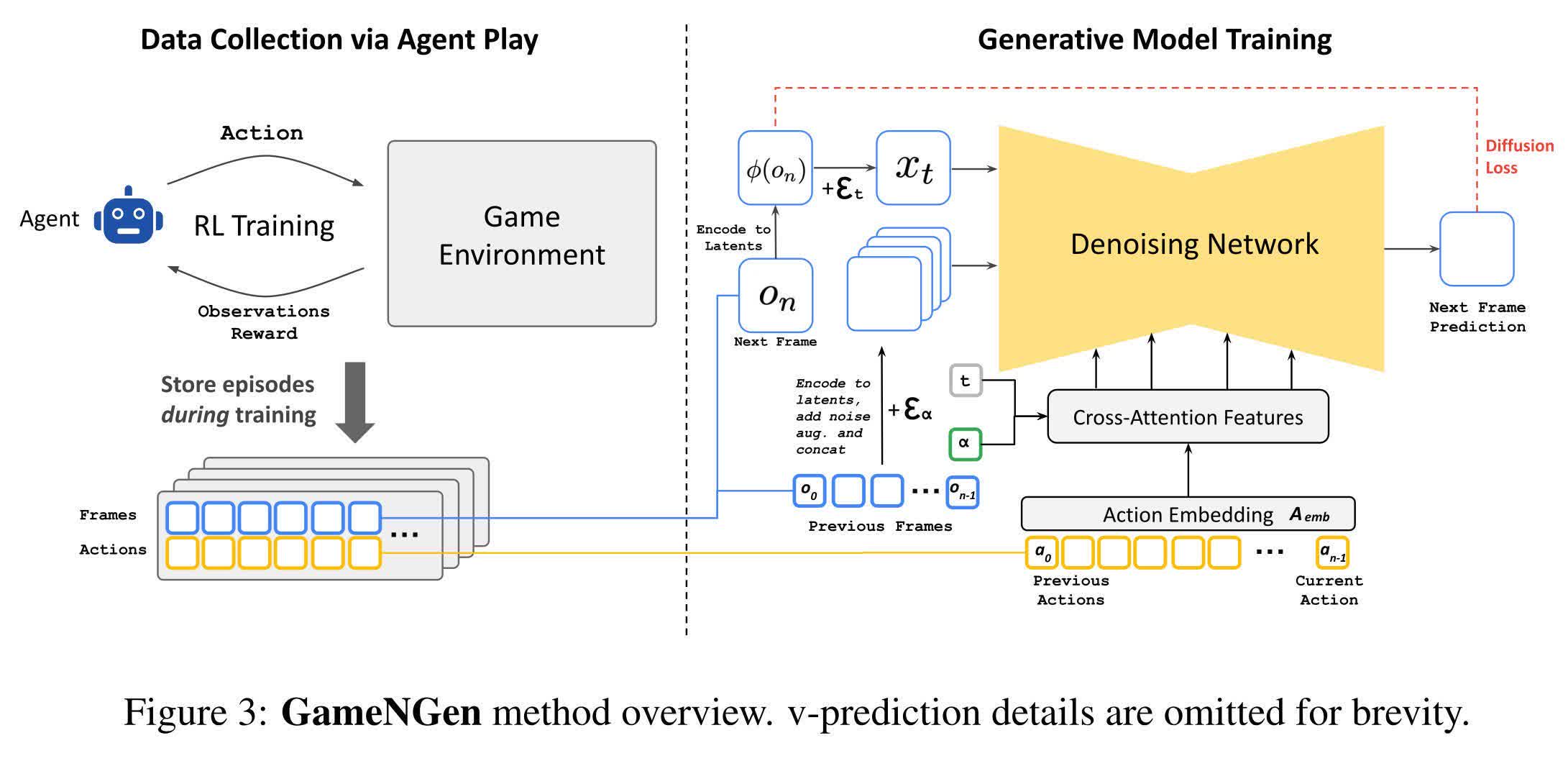

The researchers developed the engine by combining two separately trained programs. First, they trained a reinforcement learning agent to play Doom. Then, they trained a customized version of Stable Diffusion 1.4 on the actions and frames generated by the RL agent.
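The hand-off between the two training stages can be sketched as a data-preparation step. This is a minimal illustration under loud assumptions: a random policy stands in for the trained RL agent, integer frame IDs stand in for rendered screens, and the action names and `context` length are hypothetical. It shows only how recorded gameplay could be turned into next-frame-prediction examples (past frames plus actions as input, next frame as target); training the Stable Diffusion model itself is out of scope.

```python
import random
from typing import List, Tuple

# Hypothetical action set; the real agent's action space is not described here.
ACTIONS = ["forward", "back", "turn_left", "turn_right", "fire", "use"]


def play_episode(num_steps: int, seed: int = 0) -> Tuple[List[int], List[str]]:
    """Stage 1 stand-in: a random policy replaces the trained RL agent,
    and integer IDs replace the frames the game would render."""
    rng = random.Random(seed)
    frames = [0]          # initial frame
    actions: List[str] = []
    for step in range(1, num_steps + 1):
        actions.append(rng.choice(ACTIONS))  # actions[k] leads from frame k to k+1
        frames.append(step)                  # the real game would render a frame here
    return frames, actions


def make_examples(frames: List[int], actions: List[str], context: int):
    """Stage 2 data prep: pair each target frame with the preceding
    `context` frames and the actions taken on each of them."""
    examples = []
    for i in range(context, len(frames)):
        past_frames = frames[i - context:i]
        past_actions = actions[i - context:i]   # actions[i - 1] produced frames[i]
        examples.append((past_frames, past_actions, frames[i]))
    return examples


frames, actions = play_episode(num_steps=10)
examples = make_examples(frames, actions, context=4)
print(len(examples))                   # 7
print(examples[0][0], examples[0][2])  # [0, 1, 2, 3] 4
```

Separating the stages this way means the agent only has to generate diverse gameplay; the generative model then learns, from examples like these, what frame should follow a given history of frames and actions.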

Game developers have recently started using generative AI for tasks like asset creation and concept development. The researchers go further, suggesting that technology like GameNGen could eventually allow developers to write and edit games using text and image prompts.